(Note: this guide is written with course design in mind, but the same steps and principles can and should be applied when evaluating tools for other purposes, such as research labs or departmental use!)

When building your course and selecting external educational tools (an online textbook, a code submission site, a polling service, etc.), it’s important to choose products that ensure universal access for all students.

Sometimes, though, it may be difficult to find an accessible option that also fulfills the specialized needs of a course, let alone to evaluate on your own whether a tool is accessible (assuming the tool isn’t already required to go through UCLA’s vetting process for products above a certain price threshold).

Here, we provide guidance on evaluating and pre-vetting potential course tools, which will help us streamline and expedite the process of determining a tool’s accessibility.

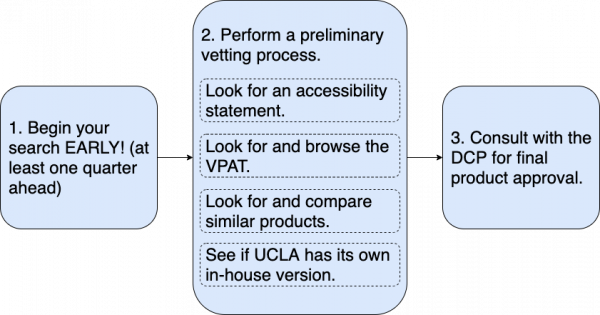

1. Begin your search for course tools early!

Find and evaluate tools at least a quarter before your course is set to begin. Doing so allows ample time for a vetting process, and for finding a new tool if the Disability and Computing Program (DCP) finds that the selected tool is inaccessible. Selecting a new tool a couple of days before a quarter starts, only to find out in the first two weeks that it’s inaccessible to a student, would be an unfortunate situation, both for the student’s learning and because of UCLA’s legal responsibility to provide equal access to education.

2. Do a preliminary accessibility check of products, and look for multiple options (including any in-house options from UCLA).

(Note: this process should only be a vetting process for eliminating clearly inaccessible products, not for self-approving products.)

See below for more guidance on looking at multiple product options, accessibility statements, and Voluntary Product Accessibility Templates (VPATs):

Search for similar products, and evaluate multiple products against each other.

For example, if you’re considering using a polling service, look at products like PollEverywhere, Slido, and Mentimeter, and compare their accessibility statements and VPATs to see if any are clearly inaccessible.

Look for tools already created or provided by UCLA.

These tools may already be fully accessible (and/or quicker for the DCP to evaluate). For example, UCLA already has an Online Polling Tool available, which was developed in-house and is free to use.

Look for an accessibility statement.

Does the vendor have one? If so, what does it mention? Is it general and vague, or is it specific about the types of support offered and the vendor’s next steps for improvement?

- “Orange” flags: “ADA compliant/compliance”, “striving to be compliant”, “aims to conform”, general vagueness

- “Yellow” flags (or more promising phrases): “WCAG 2.0,” “WCAG 2.0 AA,” “we are compliant” (instead of “trying” or “aiming” to be).
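
If you are comparing several accessibility statements, a quick phrase scan can help with a first pass. The sketch below is only an illustration: the phrase lists are taken from the flags above, and the function name `find_flags` is hypothetical. It is not a substitute for reading the statement or for DCP review, since a matching phrase says nothing about whether the product is actually accessible.

```python
# A minimal sketch, assuming the statement has been pasted in as plain text.
# The phrase lists mirror the "orange" and "yellow" flags in this guide;
# they are illustrative, not an official or complete list.
ORANGE_PHRASES = [
    "ada compliant",
    "ada compliance",
    "striving to be compliant",
    "aims to conform",
]
YELLOW_PHRASES = [
    "wcag 2.0 aa",
    "wcag 2.0",
    "we are compliant",
]


def find_flags(statement: str) -> dict[str, list[str]]:
    """Return the orange and yellow phrases that appear in a statement."""
    # Lowercase and collapse whitespace so line breaks do not hide a phrase.
    text = " ".join(statement.lower().split())
    return {
        "orange": [phrase for phrase in ORANGE_PHRASES if phrase in text],
        "yellow": [phrase for phrase in YELLOW_PHRASES if phrase in text],
    }


if __name__ == "__main__":
    sample = "We are striving to be compliant with the ADA."
    print(find_flags(sample))
```

An empty result for both lists is itself worth noting, because it may mean the statement falls under “general vagueness.”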

See if the product has a Voluntary Product Accessibility Template (VPAT).

Was the VPAT completed by the vendor, or by a third-party accessibility consulting service? How many criteria does the product “Support” or only “Partially support,” and does the VPAT include descriptions or workarounds in the comments for each criterion? (Note: sometimes a VPAT may not be publicly displayed, but it may be available if requested from the vendor.)

- “Orange” flags: VPAT completed by the vendors themselves; VPATs with little or no commentary for each criterion

- “Yellow” flags: VPAT completed by third-party accessibility experts or accessibility consultants; VPAT with plenty of documentation and commentary for each criterion
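
To compare several VPATs side by side, it can help to tally the conformance levels and check how many criteria actually come with remarks. The sketch below assumes you have typed or copied a few rows from a VPAT table by hand. The `VpatRow` class, the `summarize` function, and the sample remarks are all hypothetical and only illustrate the idea.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class VpatRow:
    """One row of a VPAT table, entered by hand (hypothetical structure)."""
    criterion: str
    conformance: str
    remarks: str = ""


def summarize(rows: list[VpatRow]) -> tuple[Counter, float]:
    """Count conformance levels and the share of rows that have remarks."""
    counts = Counter(row.conformance for row in rows)
    with_remarks = sum(1 for row in rows if row.remarks.strip())
    share = with_remarks / len(rows) if rows else 0.0
    return counts, share


if __name__ == "__main__":
    # Made-up sample data for illustration only, not from a real VPAT.
    rows = [
        VpatRow("1.1.1 Non-text Content", "Supports", "Images have alt text."),
        VpatRow("1.4.3 Contrast (Minimum)", "Supports"),
        VpatRow("2.1.1 Keyboard", "Partially Supports",
                "Drag-and-drop needs a mouse; a menu alternative exists."),
        VpatRow("1.2.4 Captions (Live)", "Not Applicable"),
    ]
    counts, share = summarize(rows)
    print(counts)
    print(f"Rows with remarks: {share:.0%}")
```

A VPAT with many “Supports” rows but a low share of remarks matches the “orange” flag above, while a high share of remarks is a more promising sign.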

Check out the Appendices below for some explanations and example comparisons. (These include “Appendix A: What is ‘criteria’ in a VPAT table? A Quick Explanation,” “Appendix B: Who wrote the VPAT and Why it Matters,” and “Appendix C: Comparing Vendors’ responses in a VPAT.”)

3. Consult with the DCP about your chosen products and tools.

After eliminating any clearly inaccessible products, contact the Disability and Computing Program (DCP) to have your selected tools/products reviewed and approved or disapproved by our team. Even when a product seems promising, the best way to confirm its accessibility is to have it tested by our team at the DCP. When contacting the DCP about a product, please include the following information:

- Your name/contact info

- Your department

- Vendor name

- Vendor email

- Vendor website

- Product/tool/software name

- Brief (1 to 2 sentence) description of product/tool and how you plan to use it for your course

- Does the product have front-end use in the course? (yes/no)

    - (example: Gradescope, used by students to submit assignments; survey tool, used for participating in surveys)

    - If yes:

        - Who is the audience for front-end use? (faculty, staff, students, public, or multiple of these groups?)

        - How many front-end users are you anticipating?

- Does the product have back-end use in the course? (yes/no)

    - (example: Gradescope, used on the back-end by TAs and instructors for grading assignments; survey tool, used to create surveys)

    - If yes:

        - Who is the audience for back-end use? (faculty, staff, students, public, or multiple of these groups?)

        - How many back-end users are you anticipating?

- Does the product/tool/software require login? (yes/no)

- Does the vendor have an accessibility statement? (yes/no)

    - If yes, provide link or copy

- Does the vendor have a VPAT? (yes, public/yes, upon request/no)

    - If yes, provide link or copy

- Urgency: (4 weeks/6-8 weeks/3-4 months)

    - Note: stating a higher urgency does not guarantee that we will be able to turn around our evaluation in the amount of time requested. This is why early requests, at least a quarter in advance, are key!

By starting your search early, doing a preliminary check or comparison, and consulting with the DCP, you’ll be able to select products that better serve all of your students—without having to face any hectic last-minute adjustments or legal actions if something is deemed inaccessible!

Appendices

Appendix A: What is “criteria” in a VPAT table? A Quick Explanation

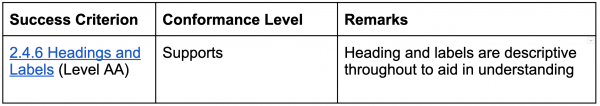

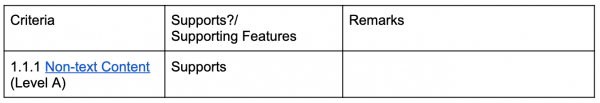

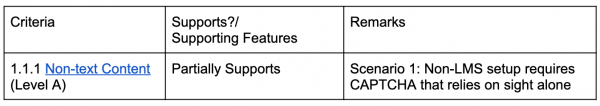

The tables of criteria in a VPAT report include three columns: “Criteria” or “Success Criterion,” “Conformance Level,” and “Remarks.”

“Criteria” or “Success Criterion”: the specific requirements the product is evaluated against. These are based on WCAG accessibility guidelines, usually the WCAG 2.0 or 2.1 Success Criteria.

“Conformance Level”: the degree to which a product fulfills each criterion. Possible responses are “Supports,” “Partially Supports,” “Does Not Support,” “Not Applicable,” or “Not Evaluated.”

“Remarks”: this is where the report writers can (and should!) include additional commentary on how a product conforms to the given criterion, in which ways the product does not conform, possible workarounds, and more.

Appendix B: Who wrote the VPAT and Why it Matters

Evaluating the accessibility of a tool or product requires specialized technical expertise, which is why vendors are encouraged to contract third parties to complete their VPAT forms. Some vendors may have completed theirs in-house, but these assessments are more likely to miss crucial accessibility issues because of a lack of accessibility expertise.

Here is an example of a VPAT completed by a third party:

Perusall's VPAT from December 2020 was clearly and explicitly completed by a third-party accessibility consulting company, as documented on the first page. Every criterion in the VPAT includes descriptive comments, whether it is marked “Supported,” “Partially supported,” or “Not Applicable.” (“Does not Support” is also a possible evaluation, though Perusall is accessible enough that all applicable criteria are at least partially supported.)

In contrast, here is an example of a VPAT that appears to have been completed by the vendor:

Poll Everywhere’s VPAT from 2020 does not mention any third-party reviewers or consultants anywhere, and appears to have been completed in-house by the vendors themselves. While some criteria include commentary in the remarks column, many criteria have no additional commentary or descriptions.

As described earlier in this guide, it’s better to have a VPAT completed by third-party accessibility experts, because otherwise important functions or inaccessible features may be missed or glossed over. It’s also important to see additional commentary and descriptions for as many criteria as possible (ideally all criteria). For these reasons, Perusall’s VPAT is more trustworthy than Poll Everywhere’s VPAT.

Appendix C: Comparing Vendors’ responses in a VPAT

VPATs require comments for each criterion. So if a vendor claims less than full support but details the issues (especially if they provide workarounds), this is more promising than a vendor who simply claims full support without further documentation. (With this in mind, the ideal scenario is a VPAT with both as many fully “Supported” criteria as possible and detailed commentary for every criterion!)

In the example below, this vendor claims full support, but offers no documentation:

This vendor claims less than full support, but details the issue:

Even if a vendor claims full support, their report is not very useful or trustworthy when it has almost no commentary. The second example has more honest reports of support in addition to detailed commentary, making it the better choice between the two.

The best way to ensure accessibility, though, is through product testing by trusted accessibility experts, such as our team at the DCP. This way, we can confirm through first-hand testing that your chosen product meets UCLA’s accessibility standards and is ready to be used in a UCLA course.

(basis for example: Minnesota’s VPAT Evaluation Scoring guide)

(VPAT table examples taken from or modified from Perusall's VPAT from December 2020)